
Generative AI is making waves in healthcare and rapidly reshaping the industry. A recent survey found that nearly 48% of U.S. consumers are comfortable with at least one generative AI application in healthcare.

There is excitement as competitors add AI-powered features to meet patients' demand for more innovative tools, but investors want clear answers.

Business leaders seeking to capitalize on Gen AI's potential must also be aware of the risks inherent in applying it to patient care, given the large volumes of sensitive medical data involved.

Integration cannot be a blind rush into existing systems and apps; that approach can lead to compliance violations, inaccurate outputs, or wasted budgets.

The goal of this article is to demystify what true readiness for generative AI in healthcare looks like. The focus is on creating real value while protecting patient trust and maintaining compliance.

Let's get started.

How Do I Know If My Healthcare App Is Ready For Generative AI Integration?

If you already have a healthcare app that functions well and you want to integrate new AI features, seamless integration must be at the top of your list.

Let’s evaluate your app’s readiness across five dimensions:

1. Data Maturity

Data maturity is vital for avoiding data quality problems. If it is not addressed, immature data practices can result in siloed information or even privacy concerns.

You can strengthen data maturity by standardizing formats (such as FHIR) and implementing robust integration pipelines.

You can ask:

- Is patient data unified and consistent across all sources?

- Has the data been cleaned, and is it accurate?

- Is real-time or near-real-time data access possible?

For generative AI to deliver accurate insights safely and compliantly, the groundwork must start with the data. Patient information needs to be unified, clean, and easily accessible.

👉 Here's a good example: let's say lab data and EHR records are not fully connected. In such cases, a generative AI model that summarizes a patient’s condition could miss essential lab results and give incomplete or harmful advice.
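To make the lab/EHR gap concrete, here is a minimal Python sketch of a pre-summarization check. It assumes simplified FHIR-style `Patient` and `Observation` resources held as plain dictionaries (real FHIR resources carry far more fields), and the helper names `link_labs` and `missing_lab_context` are hypothetical. The idea is to flag patients with no linked lab results before a model is asked to summarize them.

```python
from collections import defaultdict

# Hypothetical, heavily simplified FHIR-style resources (illustration only).
patients = [
    {"resourceType": "Patient", "id": "p1"},
    {"resourceType": "Patient", "id": "p2"},
]
observations = [
    {
        "resourceType": "Observation",
        "id": "o1",
        "subject": {"reference": "Patient/p1"},
        "code": {"text": "HbA1c"},
        "valueQuantity": {"value": 7.2, "unit": "%"},
    },
]


def link_labs(patients, observations):
    """Group lab Observations under the Patient each one references."""
    labs = defaultdict(list)
    for obs in observations:
        ref = obs.get("subject", {}).get("reference", "")
        if ref.startswith("Patient/"):
            labs[ref.split("/", 1)[1]].append(obs)
    # Every patient gets an entry, even if no labs are linked to them.
    return {p["id"]: labs.get(p["id"], []) for p in patients}


def missing_lab_context(patients, observations):
    """Return IDs of patients who would be summarized without any lab data."""
    linked = link_labs(patients, observations)
    return sorted(pid for pid, obs in linked.items() if not obs)
```

How a flagged patient is handled (routing to a clinician, or attaching an explicit "no lab data available" warning to the summary) is a policy decision for your clinical governance, not something the check itself decides.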

2. Compliance Foundation

All healthcare applications handle sensitive patient information, so compliance with regulations like HIPAA and GDPR is non-negotiable.

You therefore need a solid compliance foundation before adding generative AI to your healthcare application.

Ask yourself:

- Are data protection mechanisms, such as encryption and access controls, in place?

- Are audit trails in place? Can every access, edit, and AI-created note be traced for accountability?

- Are your privacy policies and consent procedures up to date? Do they conform to regional regulatory standards?

👉 Here's a great example: without proper audit trails, AI-created notes could go untracked, turning routine documentation into a compliance risk.
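One common way to make audit trails tamper-evident is hash chaining: each entry stores the hash of the previous one, so altering an earlier record breaks verification. The sketch below is a minimal, in-memory Python illustration; the `AuditLog` class and its fields are hypothetical, and a production system would persist entries to write-once storage and tie actors to authenticated identities.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained log: editing an earlier entry breaks the chain."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, actor, action, resource, ai_generated=False):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "ai_generated": ai_generated,  # marks notes drafted by a model
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check each link to the previous entry."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Flagging `ai_generated` on each entry is what lets a reviewer later separate model-drafted notes from clinician edits.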

3. Technical Architecture

Generative AI needs a high-performance yet flexible technical setup that can support its heavy computational requirements. It also requires reliable access to a wide range of data sources.

A modular, API-friendly, cloud-enabled design lets you modify or swap AI models without disrupting your current workflows, which helps preserve patient safety and reliability.

Ask yourself:

- Is your app modular? Can components be replaced or updated without impact on the overall system?

- Is it API-friendly? Is your app well-suited to connect with external AI services such as GPT-based models?

- Is it cloud-enabled? Does your infrastructure have scalable computing and storage for AI workloads?

👉 Here's a good example: monolithic platforms often struggle to integrate GPT-based APIs without significant refactoring, which leads to delays and higher costs.
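The modularity question above can be illustrated with a small interface boundary. In this Python sketch, the existing workflow depends only on a hypothetical `SummaryModel` protocol, so a GPT-based client, a different vendor, or a local model could be swapped in without touching workflow code. `EchoStubModel` is a placeholder for testing, not a real model integration.

```python
from typing import Protocol


class SummaryModel(Protocol):
    """Anything that can turn clinical text into a summary."""

    def summarize(self, text: str) -> str: ...


class EchoStubModel:
    """Hypothetical offline stand-in: returns the first sentence of the input."""

    def summarize(self, text: str) -> str:
        return text.split(".")[0].strip() + "."


class IntakeWorkflow:
    """Workflow code sees only the SummaryModel interface, never a vendor SDK."""

    def __init__(self, model: SummaryModel):
        self.model = model

    def process(self, intake_text: str) -> dict:
        # AI drafts are always marked for clinician review in this sketch.
        return {"summary": self.model.summarize(intake_text), "needs_review": True}
```

A real adapter would wrap your chosen vendor's SDK behind the same `summarize` method; keeping vendor-specific code inside that adapter is what limits refactoring when models change.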

4. Business Case Clarity

Before adding generative AI, examine potential use cases and prioritize those with high impact and measurable value.

Ask yourself:

- Which tasks can AI enhance or automate? Examples include patient chatbots and automated prior-authorization letters.

- What is the projected ROI? Consider whether AI can reduce expenses, save time, or improve patient outcomes in a quantifiable manner.
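A first-pass ROI projection can be simple arithmetic: annual savings equal hours saved per month × 12 × the loaded hourly staff cost, and ROI is the net benefit divided by the annual AI cost. The Python function below is a hypothetical helper, and the figures in the usage note are illustrative placeholders, not benchmarks.

```python
def projected_annual_roi(hours_saved_per_month, loaded_hourly_cost, annual_ai_cost):
    """Return (annual_savings, net_benefit, roi_ratio) from estimated inputs."""
    if annual_ai_cost <= 0:
        raise ValueError("annual_ai_cost must be positive")
    annual_savings = hours_saved_per_month * 12 * loaded_hourly_cost
    net_benefit = annual_savings - annual_ai_cost
    roi_ratio = net_benefit / annual_ai_cost  # 0.6 means a 60% return
    return annual_savings, net_benefit, roi_ratio
```

For example, 200 hours saved per month at $40 per hour against $60,000 in annual AI costs gives $96,000 in savings, a $36,000 net benefit, and an ROI of 0.6 (60%). Every input is an estimate, so it is worth rerunning the numbers with pilot data once you have it.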

👉 Here's an illustration: without well-defined use cases, generative AI projects can become costly experiments with limited effect on operations or patient care.

5. Change Management Readiness

Successful generative AI integration depends as much on people as on technology.

Ask yourself:

- Are leadership and key stakeholders committed? Without executive support, AI initiatives can lose momentum.

- Are staff trained and prepared for the switch? Operational and clinical teams need to understand where AI fits into their processes to use it effectively.

- Do you have a plan for managing resistance to change?

👉 Here's an example: even the most advanced AI features can fail if staff are hesitant to use them or workflows are disrupted, resulting in delayed ROI and diminished patient benefits.

✒️ Pete Peranzo, Co-founder of Imaginovation, shares, “You want first to get your application ready, make sure it's top-notch before you start really integrating AI. It's probably a lot more beneficial to fix the UI/UX than just sitting there and integrating AI on top of a crappy application.”

Pete highlights that the most common gap preventing healthcare applications from being ready for generative AI is the state of their existing application and its technical architecture.

Specifically, the application needs to be well-built and optimized before integrating AI, as adding AI to a poorly designed app offers limited benefit. Data compliance is also essential, but the foundational issue is ensuring the application's quality and readiness first.

💡 Bottom Line

Integrating generative AI before your healthcare app is ready puts patient safety, regulatory compliance, and ROI at risk. The best approach is to strengthen your app’s data maturity, compliance, architecture, business case, and change management first.

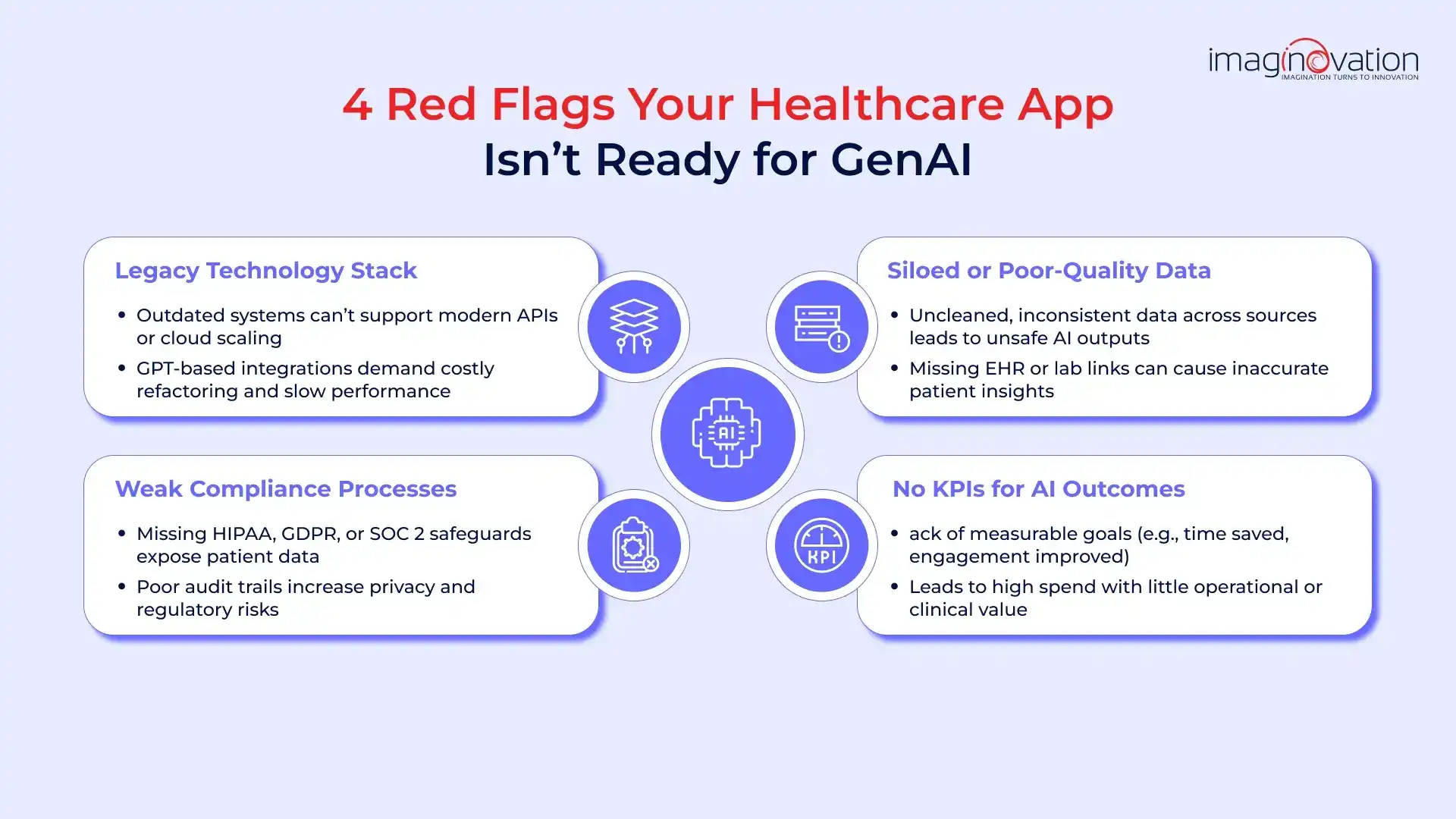

What Are The Red Flags That Show My Healthcare App Isn’t Ready For Generative AI Yet?

On the one hand, there is immense potential for generative AI in healthcare; on the other hand, several difficulties exist.

Thus, before adding generative AI to your healthcare app, it is vital to watch for these warning signs:

1. Legacy Technology Stack

If your platform can't support modern APIs or cloud scaling, it is a major red flag.

In such scenarios, integrating GPT-based services will require significant refactoring and could slow performance or increase costs.

2. Siloed or Poor-quality Data

Patient data is often stored in unstructured or inconsistent formats, and duplicate entries may go unnoticed. Both problems can lead to unsafe or erroneous AI outputs.

Avoid feeding such data into AI models unchecked. Uncleaned, ununified data from EHRs, labs, imaging, and wearables can cause generative models to misinterpret patient conditions.
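As a rough illustration of catching duplicates and inconsistent formats, this Python sketch normalizes dates of birth from a few assumed formats and flags records that share a normalized name and birth date. Exact-match keys like this are a deliberate simplification; real patient matching usually relies on a master patient index or probabilistic matching. The `%m/%d/%Y` format also assumes U.S. month-first dates, and the field names are hypothetical.

```python
from datetime import datetime

# Assumed input formats; "%m/%d/%Y" treats ambiguous dates as U.S. month-first.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def normalize_dob(raw):
    """Convert a date-of-birth string to ISO format, or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def dedupe(records):
    """Split records into (unique, duplicate_pairs, unparseable)."""
    seen, duplicates, unparseable = {}, [], []
    for rec in records:
        dob = normalize_dob(rec["dob"])
        if dob is None:
            unparseable.append(rec)  # route to manual review, don't guess
            continue
        key = (rec["last_name"].strip().lower(), rec["first_name"].strip().lower(), dob)
        if key in seen:
            duplicates.append((seen[key], rec))
        else:
            seen[key] = rec
    return list(seen.values()), duplicates, unparseable
```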

3. Weak Compliance Processes

AI systems will inevitably handle sensitive patient information, so missing HIPAA safeguards, SOC 2 reporting, or GDPR readiness is another major red flag.

In the absence of these safeguards, organizations could be at risk of privacy breaches and regulatory penalties.

4. No KPIs Tied to AI Outcomes

Sometimes leadership chases innovation without defining measurable goals, such as reduced documentation time or improved patient engagement.

In such scenarios, AI investments could drain budgets without delivering value.

✒️ Real-world Caution: Several healthcare apps that rushed to launch generative AI chatbots without strong data governance exposed patients to incorrect or incomplete advice, damaging trust and triggering regulatory scrutiny.

Pete Peranzo points to IBM Watson Health, a health-technology division of IBM, as an example of rushing AI into patient care. Despite an investment of over $5 billion, the platform ultimately failed after it recommended unsafe and incorrect cancer treatments.

The failure was attributed to improper training: the system was trained largely on a small number of hypothetical cases developed with Memorial Sloan Kettering Cancer Center rather than on real-world patient data, which led to unsafe recommendations. Pete highlights this example to underscore the risks of implementing AI without adequate research and proper training data.

💡 Bottom Line

Identify and address these red flags before integration to keep your healthcare app robust.

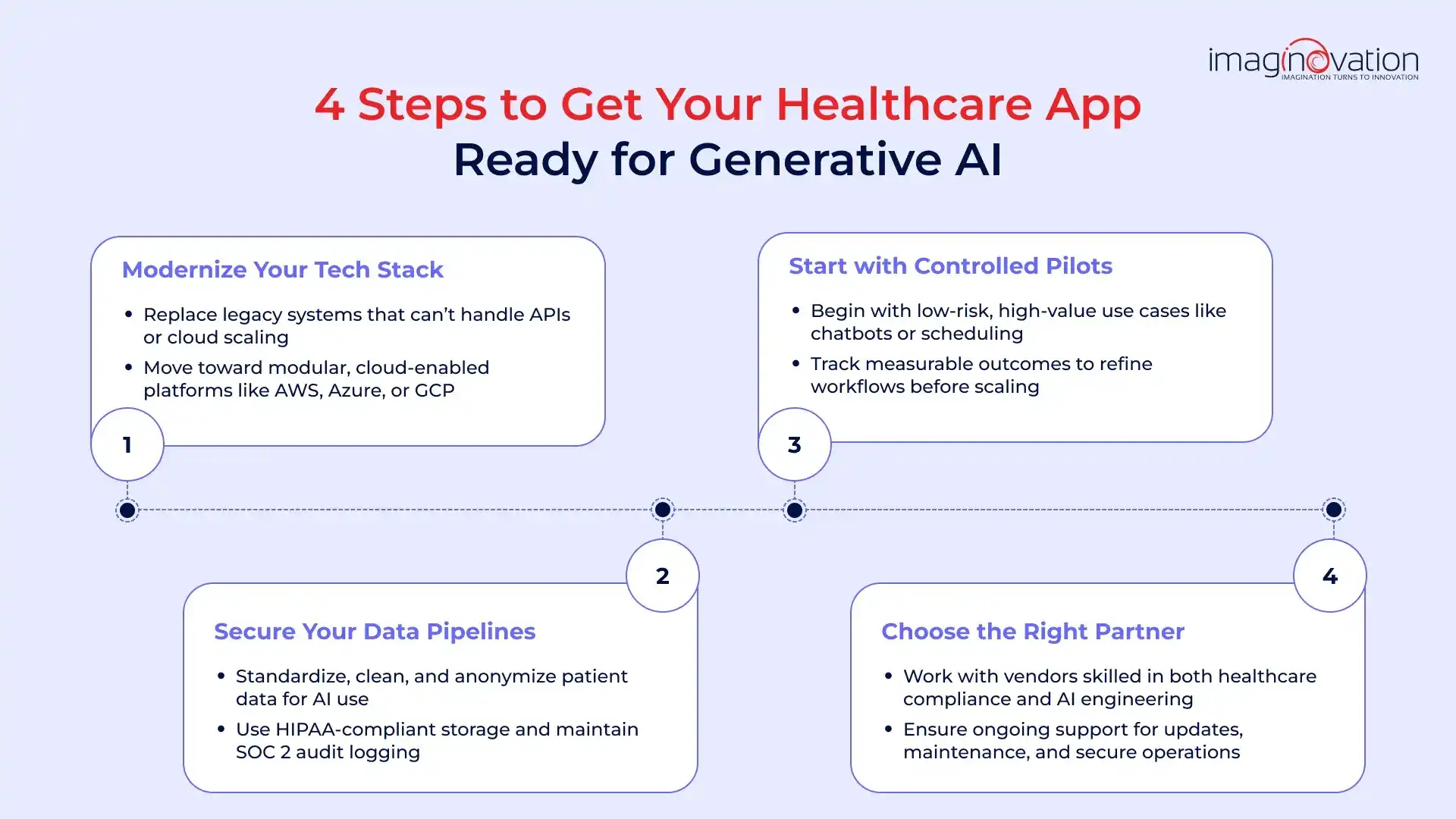

How Can I Prepare My Healthcare App For Generative AI Integration If It’s Not Ready Yet?

Here are the areas to start working on to prepare your healthcare app for generative AI integration.

1. Modernize Your Tech Stack

Do you have legacy systems? If yes, start identifying components that cannot support modern APIs.

Such components often struggle with AI workloads or cloud scaling. Once you have identified them, modularize or refactor them incrementally.

This lets them call AI APIs independently without disrupting core functionality. Another aspect to consider is cloud enablement.

Platforms such as AWS, Azure, or GCP can provide your organization with scalable infrastructure and AI-ready services.

2. Secure Your Data Pipelines

Begin by developing a robust data governance strategy. The first step is to standardize how data is collected, stored, and accessed throughout your application.

In addition, to reduce compliance risks, de-identification and anonymization of patient data must be performed wherever possible before AI models use it.
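Here is a deliberately minimal Python sketch of pattern-based redaction applied to free text before it reaches a model. The patterns are assumptions chosen for illustration and do NOT satisfy HIPAA de-identification on their own: names, addresses, and many other identifiers are missed (the patient's first name survives in the test sentence). Treat it as a picture of where redaction sits in the pipeline, not as a compliant de-identification tool.

```python
import re

# Illustrative patterns only. They do NOT cover all HIPAA identifiers;
# names, addresses, and free-form IDs need dedicated tooling or expert review.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE),
}


def redact(text):
    """Replace each matched identifier with a bracketed placeholder label."""
    for label, pattern in PATTERNS.items():  # applied in insertion order
        text = pattern.sub(f"[{label}]", text)
    return text
```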

Use HIPAA-compliant storage solutions, and maintain adequate audit logging to uphold SOC 2 standards.

Additionally, monitor and verify data integrity, as this helps catch errors or inconsistencies that can lead to unsafe or inaccurate AI output.
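Data verification can start with explicit, testable rules. This Python sketch checks required fields and flags values outside plausibility ranges; the field names and ranges are hypothetical placeholders, and real thresholds should come from your clinical and lab teams.

```python
# Hypothetical plausibility ranges; real limits depend on lab, units, and population.
PLAUSIBLE_RANGES = {
    "heart_rate_bpm": (20, 250),
    "temperature_c": (30.0, 45.0),
    "hba1c_percent": (3.0, 20.0),
}
REQUIRED_FIELDS = ("patient_id", "recorded_at")


def validate(record):
    """Return a list of human-readable problems; an empty list means it passed."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing {field}")
    for field, (low, high) in PLAUSIBLE_RANGES.items():
        value = record.get(field)
        if value is None:
            continue  # optional measurement not present
        if not isinstance(value, (int, float)) or not (low <= value <= high):
            problems.append(f"{field} out of range: {value!r}")
    return problems
```

A temperature of 98.6 in a Celsius field, for instance, is flagged here; that kind of unit mix-up is exactly what a model would otherwise read as a dangerous fever.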

3. Start with Controlled Pilots

Begin AI adoption by choosing areas that are low-risk and offer high value, such as automating patient communication, scheduling, or frequently asked questions.

Avoid starting with high-stakes clinical decision-making functions; tackle those once you have experience with AI outputs.

Track quantifiable effects, such as time saved, patient engagement, or error reduction, to estimate the value of the AI intervention. This data can also help refine prompts, workflows, and model selection before you scale to clinical use.
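To make pilot metrics comparable, it helps to capture the same measurements before and during the pilot. The hypothetical `pilot_summary` helper below, written in Python, compares average handling time and error rates per task; the sample numbers in the usage note are invented for illustration.

```python
from statistics import mean


def pilot_summary(baseline_minutes, pilot_minutes, baseline_errors, pilot_errors):
    """Compare per-task handling time and error rates before and during a pilot."""
    base = mean(baseline_minutes)
    pilot = mean(pilot_minutes)
    return {
        "avg_minutes_saved_per_task": round(base - pilot, 2),
        "time_reduction_pct": round(100 * (base - pilot) / base, 1),
        "error_rate_baseline": baseline_errors / len(baseline_minutes),
        "error_rate_pilot": pilot_errors / len(pilot_minutes),
    }
```

With baseline times of 12, 10, 14, and 12 minutes and pilot times of 8, 7, 9, and 8 minutes, the helper reports 4 minutes saved per task, a 33.3% reduction. Small samples like this are noisy, so a real pilot would need enough tasks to make the comparison meaningful.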

4. Choose the Right Partner

There are numerous vendors, but it is best to work with one that specializes in both healthcare compliance and generative AI engineering. Ideally, your partner should also be risk-conscious.

They should understand HIPAA, GDPR (if applicable), and FDA rules for software as a medical device.

Your potential partner should be able to facilitate scaling AI integration throughout your app ecosystem. Partners who can provide continuous updates, maintenance, and monitoring for secure, compliant operations can be invaluable.

💡 Bottom Line

A robust healthcare app that is well-integrated with generative AI requires significant preparation and planning.

The areas that you need to focus on include modernizing legacy tech, securing and governing data, and piloting low-risk AI use cases.

It is also vital to partner with experts who understand both healthcare compliance and AI engineering.

How Do I Make the Business Case For Generative AI Integration To My Board or Investors?

Focus on the outcomes your board or investors care about most. Here are some ROI scenarios to build on:

1. Cost Reductions

- Clinical Intake Automation: Generative AI can generate structured visit summaries from inputs, including patient conversations and uploaded forms.

For example, some early pilots have demonstrated the potential to save over 500 staff hours per month, which in turn can result in a 20% annual reduction in operating costs.

- Claims and Coding Support: AI-suggested ICD/CPT codes and pre-filled claims can cut manual review time and denials.

- 24/7 Patient Messaging: AI triage can handle routine questions around the clock, reducing call-center FTE requirements.
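AI-assisted patient messaging usually needs a routing layer that decides which messages an automated assistant may answer at all. The Python sketch below uses simple keyword matching as a stand-in for that layer; the term lists and queue names are hypothetical, and a real triage flow would need clinically validated rules. Urgent terms are checked first, and anything unrecognized falls through to a human.

```python
# Hypothetical keyword lists for illustration; not clinically validated.
URGENT_TERMS = ("chest pain", "can't breathe", "cannot breathe", "suicidal", "overdose")
ROUTINE_TOPICS = {
    "refill": "pharmacy_queue",
    "appointment": "scheduling_bot",
    "billing": "billing_bot",
    "hours": "faq_bot",
}


def triage(message):
    """Route a patient message; urgent signals always bypass automation."""
    text = message.lower()
    if any(term in text for term in URGENT_TERMS):
        return "escalate_urgent"
    for keyword, route in ROUTINE_TOPICS.items():
        if keyword in text:
            return route
    return "human_review"  # safe default for anything unrecognized
```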

2. New Revenue Streams

- Premium Patient Engagement Tools: Patients can easily access advanced AI features through various subscription tiers, which include symptom checkers, personalized care plans, and medication adherence reminders. The tools offer valuable support to patients, which in turn increases loyalty and creates recurring monthly or annual income.

- Enterprise Licenses: You can offer entities, such as telehealth networks, urgent-care chains, or hospitals, a ready-to-go AI intake and documentation solution under your own brand. This can create a scalable revenue stream by licensing these modules on a per-user basis.

- Data and Analytics Services: Another aspect to consider is providing access to de-identified trend reports for payers or life-science research partners.

3. Competitive Differentiation

- Faster Market Capture: If your healthcare app is first to market with HIPAA-compliant generative capabilities, it can stand out from conventional telehealth competitors.

- Stickier Ecosystem: With generative AI, you can take advantage of personalized interactions (smart follow-ups, chatbots) that will assist in driving retention and lifetime value.

4. Risk-Mitigation & Rollout Plan

- Compliance-First: Building HIPAA, SOC 2, and local data-residency requirements into the design from the start makes compliance far easier to address.

- Phased Pilots: Start with a low-risk approach focused on high-ROI areas (intake summaries, FAQ automation) before expanding to clinical decision support.

- Continuous Monitoring: Ongoing model-accuracy audits, bias checks, and real-time security alerts keep risks visible after launch.
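A model-accuracy audit can be as simple as sampling AI outputs, having clinicians mark each one as accepted or corrected, and alerting when the acceptance rate falls below a threshold. In this Python sketch, the `ReviewedOutput` type is hypothetical, and the 95% threshold and 50-review minimum are assumptions for illustration, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class ReviewedOutput:
    output_id: str
    clinician_approved: bool  # accepted without correction by a reviewer


def accuracy_audit(reviews, threshold=0.95, min_sample=50):
    """Return an audit status; too few reviews yields no verdict at all."""
    if len(reviews) < min_sample:
        return {"status": "insufficient_sample", "n": len(reviews)}
    approved = sum(r.clinician_approved for r in reviews)
    rate = approved / len(reviews)
    status = "ok" if rate >= threshold else "alert"
    return {"status": status, "n": len(reviews), "approval_rate": round(rate, 3)}
```

Refusing to report on tiny samples avoids a false sense of safety from a handful of good outputs.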

💡 Bottom Line for the Board / Investors:

- Potential 20%+ annual OPEX savings from automation, based on early pilot results.

- Upsell potential through premium patient tools and B2B licensing.

- Defensible moat as an early mover in AI-powered healthcare apps.

What’s the Next Step If My Healthcare App Isn’t Fully Ready For Generative AI?

Don’t Abandon AI; Build a Readiness Roadmap

If your app isn't ready yet, don't shelve your generative AI plans. Instead, build a clear roadmap for integrating generative AI into your healthcare app.

Start with a readiness audit to identify the gaps you need to close before a pilot begins.

While auditing, evaluate regulatory compliance (including HIPAA, SOC 2, and local data-residency rules), data maturity and quality, and technical architecture (such as API readiness, modularity, and scalability). Remember to examine security controls and clinical governance requirements.

Stage 1: Readiness

Begin by closing critical gaps, which may include implementing encrypted data storage or establishing robust data pipelines.

Implement governance rules and establish a secure testing environment, which enables experimentation while maintaining patient data privacy and compliance with regulations.

Stage 2: Pilot

Run a tightly scoped pilot, focusing on a low-risk, high-impact case, such as automating intake summaries or patient FAQ triage. During this stage, track predefined success metrics.

Additionally, monitor model performance, security, and compliance in real time.

Stage 3: Proof of Value

Once the pilot shows measurable outcomes, such as staff hours saved or improved documentation accuracy, you can expand the trial and quantify ROI.

The results can help you secure executive and compliance sign-offs, as well as ensure leadership buy-in for broader adoption.

Stage 4: Scaled Integration

Finally, connect the proven solution to your EHR and billing systems.

Remember to operationalize MLOps, train staff, and implement continuous auditing and incident-response protocols. This final phase ensures the AI capability is production-ready and resilient.

✒️ Pete Peranzo highlights that Imaginovation helps healthcare companies move beyond pilot projects and scale generative AI safely by staying involved throughout the entire process, from the initial idea to the launch of a minimum viable product (MVP) and beyond.

The Imaginovation team focuses on building a strong technology foundation that allows for growth and scalability. Rather than just developing a product, they act as an extension of the company, handling the technological aspects and ensuring the infrastructure is robust and suitable for scaling.

Moreover, they emphasize testing, gathering feedback, and expanding the team as needed to support ongoing growth while maintaining safety and efficacy.

💡 Bottom Line

A step-by-step approach reduces clinical and regulatory risk while demonstrating business value early. It also builds a repeatable process for safe, profitable, long-term use of generative AI in your healthcare app.

Conclusion

Are you wondering whether you are genuinely ready for generative AI? Imaginovation can help healthcare leaders answer that question.

We have a structured framework that can help you audit readiness across compliance, technology, and data.

Our team can also help you design pilot use cases that deliver measurable ROI, and scale integrations with regulatory compliance built in from day one.

Don't wait: schedule a Generative AI Readiness Audit with Imaginovation to move from uncertainty to a clear, actionable roadmap.